Apple Privacy-Preserving Machine Learning Workshop 2022

Earlier this year, Apple hosted the Privacy-Preserving Machine Learning (PPML) workshop. This virtual event brought Apple and members of the academic research communities together to discuss the state of the art in the field of privacy-preserving machine learning through a series of talks and discussions over two days.

In this post we will introduce a new dataset for community benchmarking in PPML, and share highlights from workshop discussions and recordings of select workshop talks.

Introducing the Federated Learning Annotated Image Repository (FLAIR) Dataset for PPML Benchmarking

Significant advances in machine learning (ML) over the last decade have been driven in part by the increased accessibility of both large-scale computing and training data. By competing to improve standard benchmarks on datasets like ImageNet, the community has discovered an array of improved techniques, optimizations, and model architectures that are broadly applicable and of proven value.

The PPML field would benefit from adopting standard datasets and tasks for benchmarking advances in the field. Benchmarks serve at least two important roles:

- Focusing research efforts on what the community has identified as important challenges

- Providing a common, agreed-upon method to measure and compare privacy, utility, and efficiency techniques

The datasets and tasks of any proposed PPML benchmark must represent real-world problems, and capture aspects that might complicate PPML training like class imbalance and label errors. They must permit training models that balance strong privacy guarantees with high-enough accuracy to be practically useful. And ideally they would be a fit for large-scale and nonlinear models, simple convex models, and training regimes with differing amounts of supervision. Most importantly, proposed PPML benchmarks must support the evaluation of models trained in a federated setting where training is distributed across user devices.

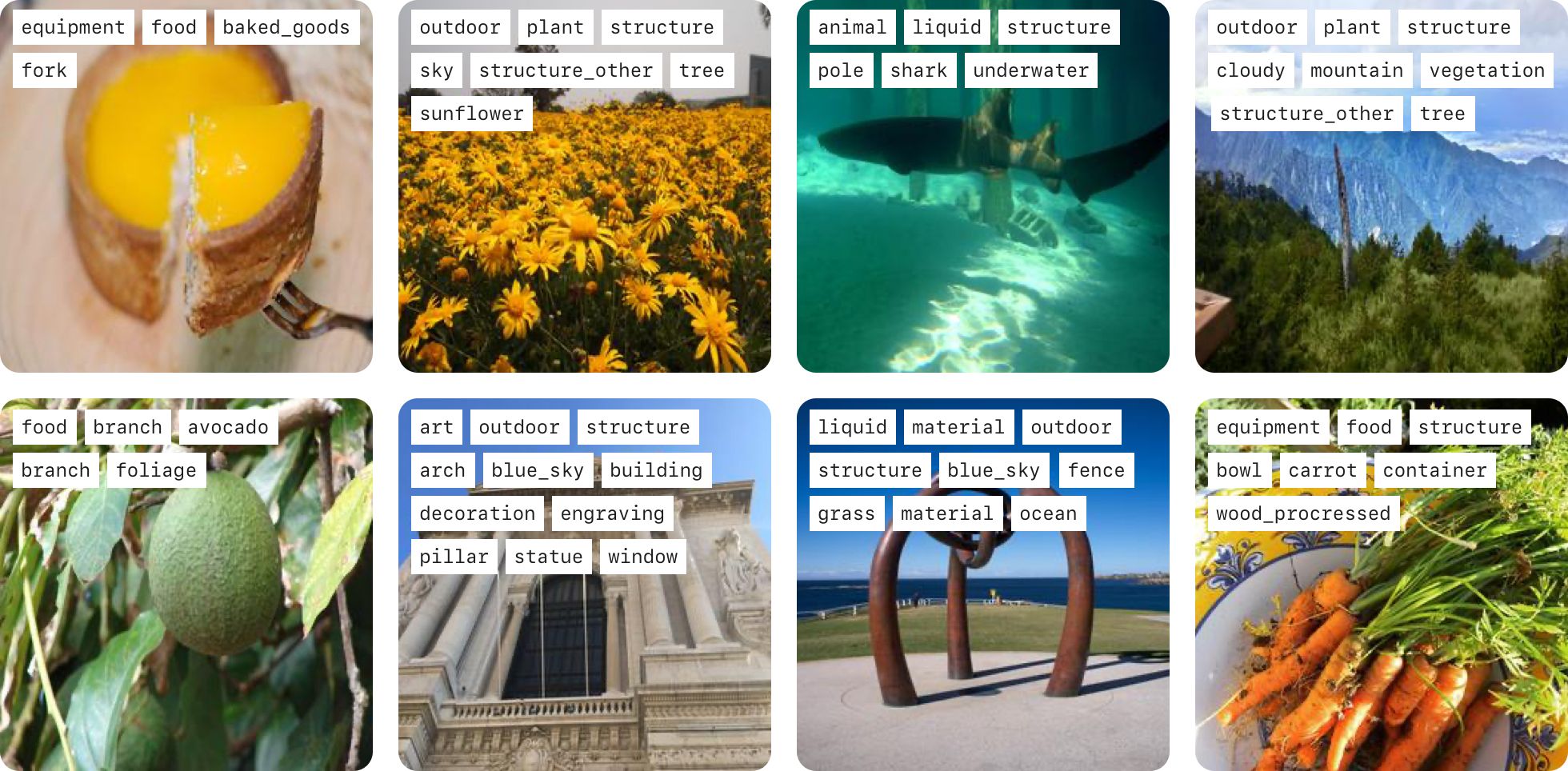

FLAIR is a dataset of 429,078 Flickr images grouped by 51,414 users that accurately captures a number of the characteristics encountered in PPML tasks. These images have been graded by humans and labeled using a taxonomy of approximately 1,600 labels. All objects present in the images have been labeled, and images may have multiple labels. Because the taxonomy is hierarchical, researchers can easily vary the complexity of benchmark and ML tasks they construct using the dataset.

ML with Privacy

A central subject of the workshop was federated learning with differential privacy. Many practical use cases would benefit from algorithms that can handle data heterogeneity, as well as better optimization methods for private federated learning. These topics were discussed in Virginia Smith’s talk (Carnegie Mellon University), Privacy Meets Heterogeneity. Several future directions, open questions, and challenges in applying PPML in practice were highlighted in Kunal Talwar’s (Apple) talk, Open Questions in Differentially Private Machine Learning.

An in-depth discussion of meaningful privacy guarantees for language models was discussed in Florian Tramèr’s talk (ETH Zurich) What Does it Mean for a Language Model to Preserve Privacy? Tramèr highlighted the need for revisiting the assumptions of existing techniques to ensure that users’ expectations of privacy are not violated. There was also a fruitful discussion about membership inference attacks, which represent one approach for using trained models to understand user data privacy. Nicholas Carlini (Google) added to this discussion with his talk, Membership Inference Attacks from First Principles.

ML with Privacy Videos

Membership Inference Attacks from First Principles

Presented by Nicholas Carlini

Open Questions in Differentially Private Machine Learning

Presented by Kunal Talwar

Privacy Meets Heterogeneity

Presented by Virginia Smith

What Does it Mean for a Language Model to Preserve Privacy?

Presented by Florian Tramèr

Learning Statistics with Privacy

Attendees discussed many critical topics on learning statistics with privacy. Large-scale deployment of differential privacy (DP) was highlighted in Ryan Rogers’ paper (LinkedIn) Practical Differentially Private Top-k Selection with Pay-what-you-get Composition. Attendees discussed budget management schemes and accumulation of privacy loss when querying the same database multiple times.

Attendees also reviewed low-communication DP algorithms and other related works, including Jelani Nelson’s work (UC Berkeley, Google) on new algorithms for private frequency estimation that improves on the performance of other schemes such as PI-RAPPOR. Participants discussed learning unknown domains with DP both in central and local models.

Finally, we discussed the optimal DP algorithms for certain basic statistical tasks, specifically the paper by Gautam Kamath (University of Waterloo), Efficient Mean Estimation with Pure Differential Privacy via a Sum-of-Squares Exponential Mechanism.

Trust Models, Attacks, and Accounting

Another area explored at the workshop was that of understanding the system-level view of risks to users’ data privacy requires modeling the entire data processing pipeline and the different levels of trust that users may have in the components of the pipeline. For example, the pipeline may include code that processes the data on the phone; a server that performs, aggregates, and anonymizes; and a server that stores the final results of the analysis. Different levels of trust can be translated into different privacy requirements for individual components of the system. Specific systems that were discussed during the breakout session included shuffling, aggregation, and secret sharing.

Another system-wide concern is privacy risks resulting from publication and storage of results of multiple data analyses performed on the data of the same user. Understanding such risks requires strengthening existing privacy accounting techniques as well as developing new approaches to privacy accounting. The attendees discussed relevant work in this area, including the paper Tight Accounting in the Shuffle Model of Differential Privacy by workshop attendee Antti Honkela (University of Helsinki).

Better understanding of privacy risks requires developing new potential attacks on privacy. The paper Auditing Private Machine Learning by Alina Oprea (Northeastern University) proposes new tools for deriving tighter bounds on the privacy loss due to publishing of intermediate models output by stochastic gradient descent.

Trust Models, Attacks, and Accounting Videos

Other Primitives

Finally, the workshop discussed cryptographic primitives that can allow for implementation of differentially private algorithms without relying on a trusted data curator. Shuffling or aggregation is a primitive that has been shown in recent years to allow for a large class of differentially private algorithms to be implemented. For example, a trusted shuffler can allow the researcher to compute private histograms with accuracy that nearly matches the central model.

Vitaly Feldman (Apple) explored this topic in his talk, Low-Communication Algorithms for Private Federated Data Analysis with Optimal Accuracy Guarantees. Workshop attendees discussed the question of implementing this primitive and compared the efficiency and trust assumptions of various approaches to building a shuffler in terms of efficiency and trust assumptions. Although shuffling is useful, there are private computations that may not be implemented in the shuffle model without losing accuracy. In principle, some simple primitives are universal for secure multiparty computation. The attendees discussed the question of finding primitives that can be practically implemented and allow for efficient implementations of other differentially private mechanisms, as referenced in works such as Mechanism Design via Differential Privacy by Kunal Talwar (Apple).

Other Primitives Videos

Workshop Resources

Related Videos

Related Papers

Acknowledgments

Many people contributed to this work including Kunal Talwar, Vitaly Feldman, Omid Javidbakht, Martin Pelikan, Audra McMillan, Allegra Latimer, Niketan Pansare, Roman Filipovich, Eugene Bagdasaryan, Congzheng Song, Áine Cahill, Jay Katukuri, Aditya Arora, Frank Cipollone, Borja Rodríguez-Gálvez, Filip Granqvist, Riyaaz Shaik, Matt Seigel, Noyan Tokgozoglu, Chandru Venkataraman, David Betancourt, Ben Everson, Nima Reyhani, Babak Aghazadeh, Kanishka Bhaduri, and John Akred.

Let’s innovate together. Build amazing privacy-preserving machine-learned experiences with Apple. Discover opportunities for researchers, students, and developers by visiting our Work With us page.

Related readings and updates.

At Apple, we believe privacy is a fundamental human right. It’s also one of our core values, influencing both our research and the design of Apple’s products and services.

Understanding how people use their devices often helps in improving the user experience. However, accessing the data that provides such insights — for example, what users type on their keyboards and the websites they visit — can compromise user privacy. We develop system…

A Survey on Privacy from Statistical, Information and Estimation-Theoretic Views

September 21, 2021research area Privacyconference IEEE BITS the Information Theory Magazine

The privacy risk has become an emerging challenge in both information theory and computer science due to the massive (centralized) collection of user data. In this paper, we overview privacy-preserving mechanisms and metrics from the lenses of information theory, and unify different privacy metrics, including f-divergences, Renyi divergences, and differential privacy, by the probability likelihood ratio (and the logarithm of it). We introduce…