Controlling Language and Diffusion Models by Transporting Activations

Large generative models are becoming increasingly capable and more widely deployed to power production applications, but getting these models to produce exactly what’s desired can still be challenging. Fine-grained control over these models’ outputs is important to meet user expectations and to mitigate potential misuses, ensuring the models’ reliability and safety. To address these issues, Apple machine learning researchers have developed a new technique that is modality-agnostic and provides fine-grained control over the model’s behavior with negligible computational overhead, while minimally impacting the model’s abilities. Activation Transport (AcT) is a general framework to steer activations guided by optimal transport theory that generalizes many previous activation-steering works. The work will be presented as a Spotlight at ICLR 2025, and code is available here.

To help generative models produce output that aligns with their users’ expectations, researchers often rely on reinforcement learning with human feedback (RLHF) or instruction fine-tuning, but these approaches are resource-intensive and become increasingly impractical as models grow in complexity. In addition, changing a model’s parameters can have unintended consequences, affecting its overall performance on other tasks.

To control the output of these generative models, users often try crafting precise prompts, but while this is more accessible, it offers limited controls. Even with carefully constructed prompts, a model’s output can be unpredictable and lacking the nuance a user might need. For example, it’s common for models to fail when prompted with instructions not to include something (see figure 1):

In many applications, such as content generation, creative writing, or even AI-assisted design, having fine-grained control over the model’s output is crucial. For example, a user might want to adjust the tone of text without altering its content, change the style of generated images while maintaining their context, or to ensure sensitive topics are handled with care, without compromising overall coherence. Activation Transport (AcT) delivers this, without the computational overhead, complexity, and volume of data required by RLHF or fine-tuning, and with more reliable results than prompt engineering.

Activation Steering: A simple-yet-powerful solution

The computational and economic cost of fine-tuning large models as well as the need for fine-grained control has motivated research on targeted interventions upon model activations to modify specific behaviors in a fine-grained way. The main advantage of these “activation steering” methods is that they do not require back-propagation and typically can be merged into the model weights.

Unlike RLHF or fine-tuning, activation steering doesn’t impact a model’s parameters, but instead leverages an understanding of the model’s operations to dynamically steer its outputs in a desired direction at inference time.

Prior approaches to activation steering have used a vector-based intervention that take the source activations of expert neurons, and shift them toward a learned target (see figure 2). However, the degree to which activations are shifted is controlled by an unbounded model-level parameter (λ\lambda), making interpretable interventions challenging. Additionally, these interventions can shift activations out of distribution, meaning that the shifted activations can end up far from what the model has learned to expect during training, resulting in a disruption of the model’s natural dynamics, which can lead to unexpected behavior and reduced performance.

Activation Transport (AcT) and Linear-AcT

Activation Transport (AcT) is a novel intervention framework that surpasses the prior limitations of activation steering by accounting for distributions of source and target activations, and using an interpretable and actionable strength parameter for fine-grained control.

AcT learns the Optimal Transport (OT) map between the source and target activation distributions. This ensures that an activation transported from the source will comply with the target distribution, minimizing the compounding effects on the dynamics of the model itself. To estimate the OT map, a small number of example sentences (e.g., hundreds) are run through the model, revealing the sets of activations for both source (e.g., impolite language) and target (e.g., polite language).

Estimating a multi-dimensional and possibly non-linear OT map would require a prohibitive amount of data, and its inference would slow down the overall LLM text generation. Therefore, we make two simplifications: (1) we consider independent activations, which allows estimating a 1D map per neuron and (2) we consider linear maps, limiting the memory footprint and ensuring fast inference. The steering with linear and independent transports is coined LinearAcT (see figure 3).

Linear-AcT works out-of-the-box for LLMs as well Text-to-Image (T2I) diffusion models, achieving strong results in both cases. This is the first work to propose a conditioning algorithm that can be used without any modification for language and image generation.

Controlling LLM Outputs with Linear-AcT

To demonstrate Linear-AcT’s effectiveness in controlling LLMs’ outputs, we benchmarked its performance on two important tasks: toxicity mitigation and truthfulness induction, while monitoring how other performance indicators are affected via the perplexity (PPL) and MMLU metrics.

We tested Linear-AcT on toxicity mitigation on Gemma-2-2b and Llama-3-8b (evaluated using the RealToxicityPrompts dataset), obtaining a 7.5x and 4.3x reduction respectively.

| Best λ | Toxicity mitigation | PPL | MMLU | |

| Original | 13.98 | 53.1 | ||

| ActAdd | 0.5 | 1.1x | 14.69 | 53.0 |

| AurA | - | 2.0x | 14.18 | 53.0 |

| ITI-c | 8.0 | 5.6x | 14.90 | 52.6 |

| Linear-AcT | 1.0 | 7.5x | 14.79 | 51.3 |

| Best λ | Toxicity mitigation | PPL | MMLU | |

| Original | 9.06 | 65.3 | ||

| ActAdd | 0.3 | 1.0x | 9.71 | 65.5 |

| AurA | - | 3.1x | 9.52 | 65.5 |

| ITI-c | 3.0 | 3.6x | 9.48 | 64.7 |

| Linear-AcT | 1.0 | 4.3x | 9.56 | 64.5 |

We tested truthfulness induction (using the TruthfulQA dataset), showing a 4.9x and 7.5x increase for each model.

| Best λ | Truthfullness (MC1 acc) | MMLU | |

| Original | 21.05 | 53.10 | |

| ActAdd | 3.0 | 23.01 | 52.83 |

| AurA | - | 21.20 | 52.73 |

| ITI-c | 2.0 | 24.53 | 51.39 |

| Linear-AcT | 1.0 | 26.00 | 51.47 |

| Best λ | Truthfullness (MC1 acc) | MMLU | |

| Original | 25.46 | 65.35 | |

| ActAdd | 0.7 | 26.19 | 65.42 |

| AurA | - | 25.34 | 65.37 |

| ITI-c | 2.0 | 30.11 | 64.71 |

| Linear-AcT | 1.0 | 33.22 | 64.78 |

Controlling Text-to-Image Diffusion Models with Linear-AcT

Text-to-image diffusion models (T2Is) are powerful tools that allow users to generate stunning images from simple text descriptions. Fine-grained control, however, is challenging; for instance, it is impossible to enforce incremental changes—such as adding more trees or making the style slightly more cartoonish—or to reliably predict how terms like ‘many trees’ will affect the generated image.

Because AcT can transport activations from one distribution to another no matter the model architecture or modality, it can enable fine-grained control over details like this for T2Is (see figure 4). To do this, AcT needs only a list of prompts that describe the source (e.g., no people) and the target (e.g., people) activation distributions.

AcT for Fine-Control of Pictorial Style

AcT also enables control over the artistic style of generated images. To demonstrate this, we appended stylistic tags (e.g., anime, cyberpunk, watercolor) to a subset of prompts from the COCO Captions dataset (32 prompts per concept).

In this setup, the original prompts serve as the source distribution, while the style-modified prompts represent the target distribution. By learning OT maps between these distributions, and by applying those maps through the interpretable strength parameter, AcT gains the ability to induce just the right amount of a desired style (see figure 5).

Do Not Think About a Pink Elephant

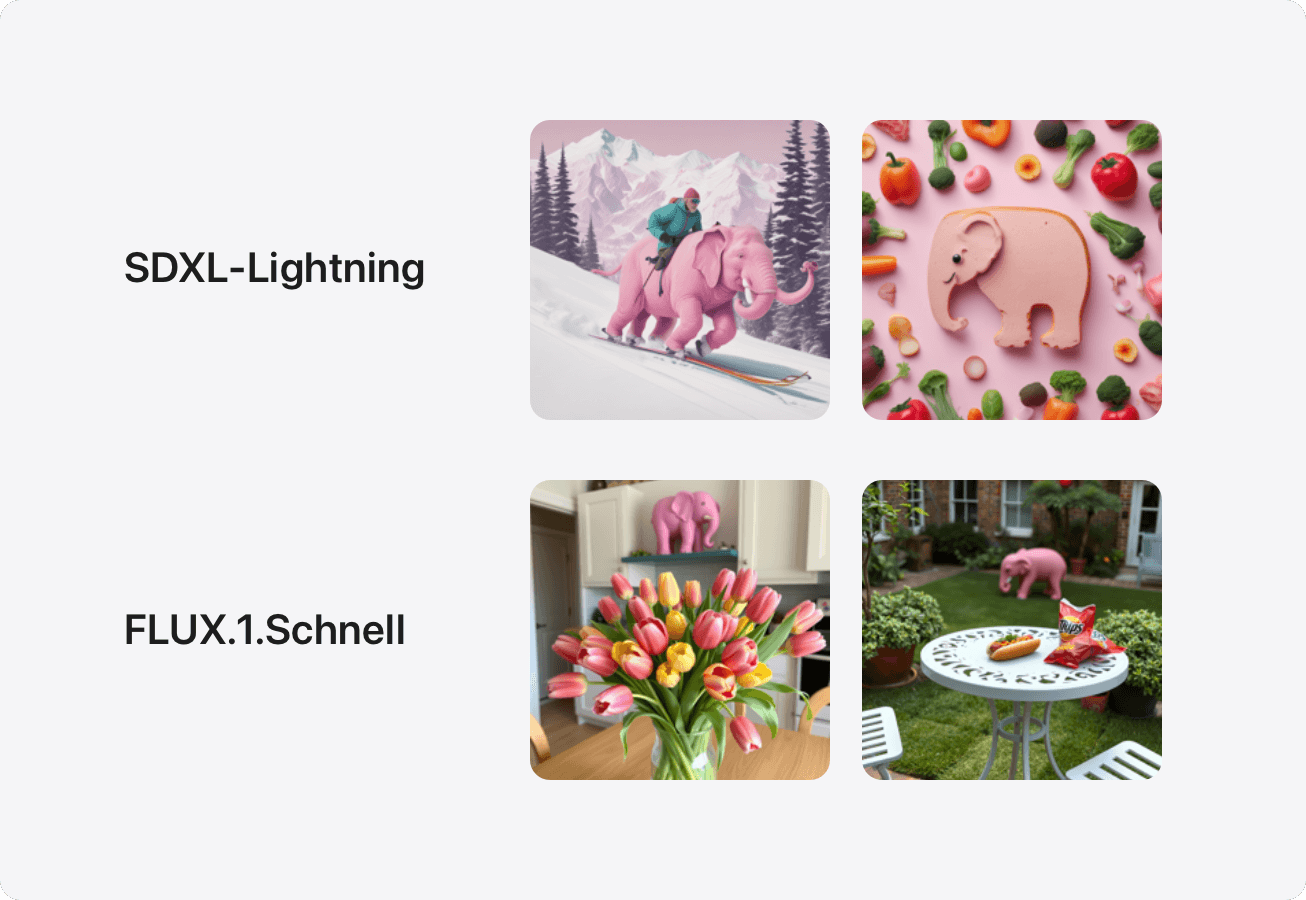

The header above will likely cause readers to think about a pink elephant - interestingly, the same phenomenon has been found to happen to T2Is (see figure 6). This may happen if T2Is’ text encoders embed sentences as a bag of words and is not powerful enough to interpret negations, but it’s often the case that including a negative instruction in the prompt (e.g., “do not show a pink elephant”) will in fact lead a T2I model to include that undesired element in the image.

Linear-AcT is an intervention that can address this challenge and remove undesired concepts from generated images. To do this, a set of source prompts that contain the concept to be removed (e.g. “a house and a tree with a pink elephant next to it”) is needed, as well as a set of target prompts where the concept is not present (e.g. “a house and a tree”). The main idea is to “isolate” the concept to be removed as the main difference between the sets of prompts. Linear-AcT learns a transport map from the source to the target set, resulting in a controllable intervention that can remove a negated concept (like “pink elephant”) that otherwise would have been included in the generated model, as shown in figure 7.

Conclusion

As the capabilities and production deployments of generative language and text-to-image models continue to grow, providing fine-grained controls over their outputs is increasingly important. While common approaches like RLHF and instruction fine-tuning have been effective in improving the alignment of LLM output with users’ expectations, these methods are resource intensive, and become impractical as models grow in complexity. Activation Transport (AcT) provides fine-grained control of the output of LLMs and T2I diffusion models, without the limitations of RLHF and fine-tuning. This general framework for steering activations according to optimal transport theory is modality-agnostic and requires negligible computational overhead, and researchers and practitioners can now use and build on AcT with the code available here.

Related readings and updates.

LinEAS: End-to-end Learning of Activation Steering with a Distributional Loss

November 3, 2025research area Fairness, research area Methods and Algorithmsconference NeurIPS

The growing use of generative models in daily life calls for efficient mechanisms to control their generation, to e.g., produce safe content or provide users with tools to explore style changes. Ideally, such mechanisms should require low volume of unpaired data (i.e., without explicit preference), and should be cheap, both at train and inference time, while preserving output quality. Recent research has shown that such mechanisms can be obtained…

Controlling Language and Diffusion Models by Transporting Activations

January 14, 2025research area Methods and Algorithmsconference ICLR

The increasing capabilities of large generative models and their ever more widespread deployment have raised concerns about their reliability, safety, and potential misuse. To address these issues, recent works have proposed to control model generation by steering model activations in order to effectively induce or prevent the emergence of concepts or behaviours in the generated output. In this paper we introduce Activation Transport (AcT), a…